Modern web apps are two things: complex, and under persistent attack. Any publicly accessible web application can receive up to tens of thousands of attacks a month. While that sounds like a reason to immediately pull the plug and find a safe space to hide, those attacks likely range from harmless to nefarious. Even so, that level of exposure cannot be ignored.

According to the 2020 Verizon Data Breach Investigations Report (DBIR), “67% of all confirmed breaches analyzed in this report came from user credentials being leaked, misconfiguration in cloud assets and web apps, and social engineering attacks.” Of that total, 43% of the breaches had a web application as the primary attack vector.

At Rapid7, we are always looking for ways to improve our coverage, and while crawling modern web apps is a tricky business, we thought to ourselves, "If I were a web application, what would I tell my friendly neighborhood ~~Spiderman~~ application security professional about where to look for issues?" This triggered some engaging conversation, in which we sought to understand what additional resources were readily available to help guide a DAST scan. What resources does a web application provide that we could hook into in order to discover more links when scanning for vulnerabilities?

With the help of Rapid7’s senior director and chief security data scientist, Bob Rudis, we found that robots.txt is in use on about 40% of the Alexa top 1 million sites, and sitemap.xml is in use on about 3% of the same sites (virtually all of which use the uncompressed XML version rather than the *.gz).

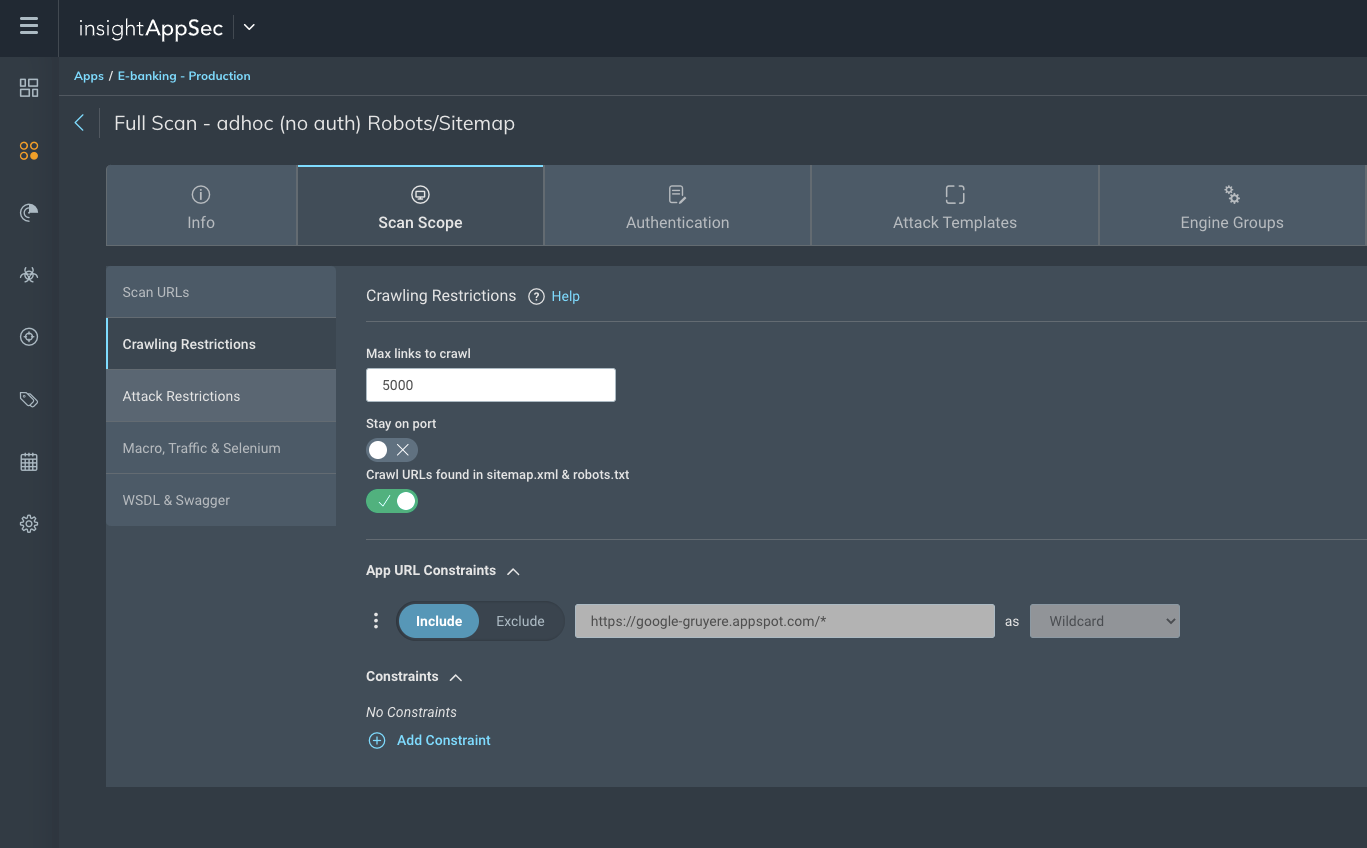

Given how popular and commonplace these resources are, InsightAppSec has just launched the ability for users to opt in to searching for links in these files. If the files exist, we simply grab the links and add them to the path we navigate through a web application looking for vulnerabilities.
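To make the idea concrete, here is a minimal Python sketch of pulling candidate links out of the two files. It is an illustration only and not how InsightAppSec implements the feature: the function names `links_from_robots` and `links_from_sitemap` are made up for this example, the sketch assumes the file contents have already been downloaded as text, and it skips wildcard patterns because they are not concrete paths.

```python
from urllib.parse import urljoin
import xml.etree.ElementTree as ET


def links_from_robots(base_url: str, robots_txt: str) -> list[str]:
    """Turn Allow/Disallow paths in a robots.txt body into absolute URLs."""
    links = []
    for raw in robots_txt.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        if field.lower() not in ("allow", "disallow") or not value:
            continue
        if "*" in value or "$" in value:
            continue  # wildcard patterns are not concrete paths
        links.append(urljoin(base_url, value))
    return list(dict.fromkeys(links))  # de-duplicate, keep order


def links_from_sitemap(sitemap_xml: str) -> list[str]:
    """Collect the text of every <loc> element, ignoring XML namespaces."""
    # Note: for untrusted XML, a hardened parser is a safer choice than
    # the standard library's ElementTree.
    root = ET.fromstring(sitemap_xml)
    return [
        el.text.strip()
        for el in root.iter()
        if el.tag.rsplit("}", 1)[-1] == "loc" and el.text
    ]


robots_body = """User-agent: *
Disallow: /admin/
Allow: /public/page.html
Disallow: /*.pdf$
"""
sitemap_body = (
    '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
    "<url><loc>https://example.com/pricing</loc></url>"
    "</urlset>"
)

seeds = links_from_robots("https://example.com", robots_body)
seeds += links_from_sitemap(sitemap_body)
```

In this sketch, `seeds` ends up holding the admin path, the public page, and the sitemap entry, which a crawler could then append to its queue alongside links found by normal navigation.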

Now, I know what you are thinking. "Isn't the point of robots.txt to stop scanners and search engines spidering my sites?" This is a common misconception. The robots.txt file does tell search engine crawlers not to request certain pages or files from your site, but the point isn't to keep them out. It is used to avoid overloading a site with requests. It won't stop a site from being indexed by a search engine (and if that's your aim, have a look at the noindex directive).
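The advisory nature of robots.txt is easy to see from the client side. The short Python sketch below uses the standard library's `urllib.robotparser` with a made-up rule set and example.com URLs: a well-behaved crawler asks `can_fetch` and honors the answer, but the check is entirely voluntary.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for illustration.
rules = RobotFileParser()
rules.parse(["User-agent: *", "Disallow: /admin/"])

# A polite crawler consults the rules before requesting a page.
admin_allowed = rules.can_fetch("*", "https://example.com/admin/login")
home_allowed = rules.can_fetch("*", "https://example.com/")

print(admin_allowed)  # False: polite crawlers are asked to stay away
print(home_allowed)   # True: no rule covers this path
```

Nothing in the file enforces that `False`. A client that never calls `can_fetch` can request `/admin/login` like any other URL, and the Disallow line has told it exactly where to look.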

Most importantly, it certainly won't stop an attacker from being nosy if they are doing reconnaissance, either in person or via bots/scrapers/scanners. An attacker won't respect a friendly request not to attack a page. You need to consider the full scope of a public web app as fair game for attack, and you should mirror that mindset in your approach to securing web apps.

Worried about your web application security? See InsightAppSec in action for yourself with a free trial, allowing access to scan one of your public web apps.
